Every day, more and more data is generated around the world – and the better a company processes this data, the more accurately it understands customer preferences and forecasts trends. Oleksii Segeda, Senior Data Engineer at Mapbox, has worked extensively with Big Data for over a decade. In this interview, he discusses how approaches to data differ across organizations, what it takes to embed data-driven thinking into a business, and how the role of the data engineer is evolving.

Oleksii, you’ve worked at both an international financial institution and a tech product company. How has your view on working with data changed over time?

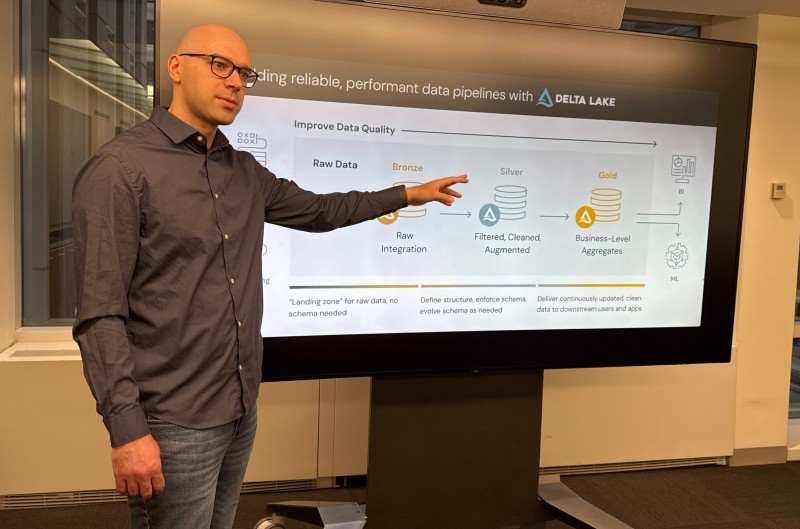

Each industry has its own specifics. For example, in banks and other large organizations, data fragmentation is a serious issue – there’s simply a huge amount of data, scattered across multiple databases. The architecture often includes legacy systems with their own quirks. Before Mapbox, I worked at The World Bank, where one of my projects involved building a unified Data Lake that integrated information from various systems. This system simplified the development of applications that needed access to that data.

Startups and smaller companies don’t usually face that kind of fragmentation – but they often lack the data they need, which slows down project development. You’re left wondering where to find the data, whether to buy it, or generate it yourself.

Another major difference is mindset – especially when it comes to data budgets. For example, in nonprofit organizations focused on global development, the end result is what matters most. Surprisingly, budgets in such cases are often higher than necessary. In commercial companies, budgets are fixed, which means you need to get creative to solve problems while staying within these limits.

I’ve also noticed that smaller commercial companies tend to be more open to data-driven thinking – where decisions are based on actual data. In large corporations, data-driven approaches are sometimes blocked by internal dynamics, where expert opinions may be valued more than the data itself.

What does “data-driven thinking” mean to you personally? Where does it begin – at the pipeline level, in team culture, or at the product strategy stage?

In my view, if data-driven thinking is absent, you need to rethink how the entire team – and ideally the whole company – operates. The goal is to move from decisions based on personal opinions or expert intuition to decisions based on analytics, facts, and data.

Building a data-driven approach starts with people. I see two paths: top-down and bottom-up. The difference lies in where implementation begins. In the top-down scenario, leadership initiates and drives the change. In the bottom-up approach, it’s the individual contributor who identifies the best path forward and presents it to improve their area, team, or product.

Many organizations think hiring a data team is enough. Why is that not the case? Where does real data culture begin?

Hiring people doesn’t solve organizational problems by itself. All data-related initiatives must be tied to concrete business use cases. When it comes to a data team, it’s essential to set clear goals for integrating data work with company objectives. You also need a roadmap to guide the team’s efforts.

A truly data-driven process begins when a company starts thinking about long-term, high-value goals – exactly the kinds of goals that data analysis can help achieve. But having a team is not enough on its own – data quality matters just as much. You need to work iteratively to improve your datasets, collaborate with subject matter experts, and organize your work around a data-first mindset. Simply hiring a group of analysts or engineers won’t cut it.

Moreover, any data team operates in collaboration with other departments – it doesn’t exist in a vacuum. That’s why building synergy between teams is crucial. One way to do this is by using a unified data platform that integrates well with the company’s other systems.

How do you personally define “data culture”? What does it include?

To me, data culture starts with accessibility. Data should be open – not in the sense of being unprotected, but in the sense that teams should have frictionless access to the information they need during a project.

Numerous studies show that companies with a strong open-data culture significantly outperform those that don’t invest in data. In my opinion, accessible information enhances all business use cases, fosters innovation, and accelerates business processes.

But for data to be more than just an “asset,” and truly drive everyday decision-making across the team – what’s needed?

Experimentation with data must be encouraged – this is the main engine of innovation. It’s also essential to maintain a strong data culture, as I’ve mentioned. I also recommend setting clear metrics and quality standards for the data the team relies on.

For data teams, standardized tooling and ongoing training are crucial – whether through workshops, onboarding sessions, or updates on new tools and trends. Equally important is having a leader who consistently models data-driven thinking in their decision-making.

Today, you’re responsible for POI Search, a mission-critical component at Mapbox. How is data culture implemented in such high-load systems? Is there a formal feedback loop between data, users, and engineering?

We have a concept called “customer obsession,” which means that every project in the company ultimately exists to improve the user experience. Data culture is no exception. We adhere to very high standards and build our data approach around three core principles:

- Quality: Data must be of high quality. Every decision is evaluated by whether it improves data quality.

- Accuracy: Data must correctly reflect the real world. For example, if you’ve got a perfectly formatted address – correct street, building, office number – but the coordinates point to the wrong part of town, that data isn’t very useful.

- Recency: Data must reflect the latest changes. The world is dynamic – businesses open and close, hours change, new locations appear – so data has to be kept up to date.
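
To make the accuracy and recency principles concrete, here is a minimal Python sketch of how such checks might look for a single point of interest. It is an illustration only, not Mapbox's actual implementation: the `Poi` record, the `check_poi` function, the reference coordinates, and both thresholds are hypothetical assumptions.

```python
from dataclasses import dataclass
from datetime import date
from math import asin, cos, radians, sin, sqrt


@dataclass
class Poi:
    """Hypothetical POI record; the field names are assumptions for this sketch."""
    name: str
    lat: float            # coordinates stored on the record
    lon: float
    ref_lat: float        # coordinates from an independent reference,
    ref_lon: float        # e.g. a geocoded version of the address
    last_verified: date   # when the record was last confirmed


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance between two points, in kilometres."""
    dlat = radians(lat2 - lat1)
    dlon = radians(lon2 - lon1)
    a = sin(dlat / 2) ** 2 + cos(radians(lat1)) * cos(radians(lat2)) * sin(dlon / 2) ** 2
    return 2 * 6371.0 * asin(sqrt(a))


def check_poi(poi, today, max_offset_km=0.5, max_age_days=365):
    """Return the principles a record appears to violate (thresholds are illustrative)."""
    issues = []
    # Accuracy: stored coordinates should be close to the reference location.
    if haversine_km(poi.lat, poi.lon, poi.ref_lat, poi.ref_lon) > max_offset_km:
        issues.append("accuracy")
    # Recency: the record should have been verified recently enough.
    if (today - poi.last_verified).days > max_age_days:
        issues.append("recency")
    return issues


cafe = Poi("Example Cafe", 50.45, 30.52, 51.45, 30.52, date(2020, 1, 1))
print(check_poi(cafe, today=date(2024, 1, 1)))
```

In this example the stored coordinates sit about one degree of latitude away from the reference and the record is several years old, so both checks flag it. A well-formatted address alone would not have caught either problem.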

As for feedback loops, we have a process called “Contribute,” where users can report errors or suggest improvements. One of our key advantages is the extremely short cycle from feedback to product update. We’ve made this a strategic priority, and it’s why our metrics in this area are better than those of many competitors.

How do you think the role of the data engineer will evolve over the next five years – and what should people start developing now?

There’s a general trend in tech toward blurring the lines between roles. Most companies don’t just want a “software engineer” – they’re looking for full-stack developers who are comfortable across multiple domains. That same trend is now influencing data engineering. Today’s data engineer is expected to be part analyst, part ETL engineer, part DevOps, and part ML engineer.

My advice is to choose one area of deep specialization but also build up basic knowledge in all adjacent fields.

We’re also seeing more automation and higher levels of abstraction. There are more tools available to handle routine operations, and direct coding is becoming less central. I recommend paying attention to innovations in data observability and data monitoring – tools that help track and understand changes in your datasets. There’s a lot happening here, both in open-source and commercial solutions.
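
As a rough illustration of what data observability tools do at their simplest, the Python sketch below profiles two snapshots of a dataset and reports suspicious changes. The function names, field names, and thresholds are hypothetical, and real open-source or commercial tools track far more than this.

```python
def profile(rows, fields):
    """Summarize a snapshot: row count and share of missing values per field."""
    total = len(rows)
    null_rate = {}
    for field in fields:
        missing = sum(1 for row in rows if row.get(field) in (None, ""))
        null_rate[field] = missing / total if total else 0.0
    return {"rows": total, "null_rate": null_rate}


def detect_changes(previous, current, max_row_change=0.2, max_null_increase=0.05):
    """Compare two profiles and describe changes above the (illustrative) thresholds."""
    alerts = []
    # Volume check: a sudden jump or drop in row count often signals a pipeline issue.
    if previous["rows"]:
        change = abs(current["rows"] - previous["rows"]) / previous["rows"]
        if change > max_row_change:
            alerts.append(f"row count changed by {change:.0%}")
    # Completeness check: a field that suddenly goes missing more often.
    for field, rate in current["null_rate"].items():
        before = previous["null_rate"].get(field, 0.0)
        if rate - before > max_null_increase:
            alerts.append(f"null rate for '{field}' rose from {before:.0%} to {rate:.0%}")
    return alerts


# Hypothetical snapshots: half of today's records have lost their phone number.
yesterday = [{"name": f"poi-{i}", "phone": "555-0100"} for i in range(10)]
today = [{"name": f"poi-{i}", "phone": "555-0100" if i < 5 else None} for i in range(10)]

fields = ["name", "phone"]
for alert in detect_changes(profile(yesterday, fields), profile(today, fields)):
    print(alert)
```

Running a comparison like this on every refresh shows that the data changed and which field is responsible, which is the starting point for finding out what drove the change.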

Overall, I think the next five years will be centered on data quality and monitoring. Companies will want to understand how their data is changing, and what’s driving those changes.